From Experimentation to Production: The 2025 Guide to Enterprise AI Model Selection

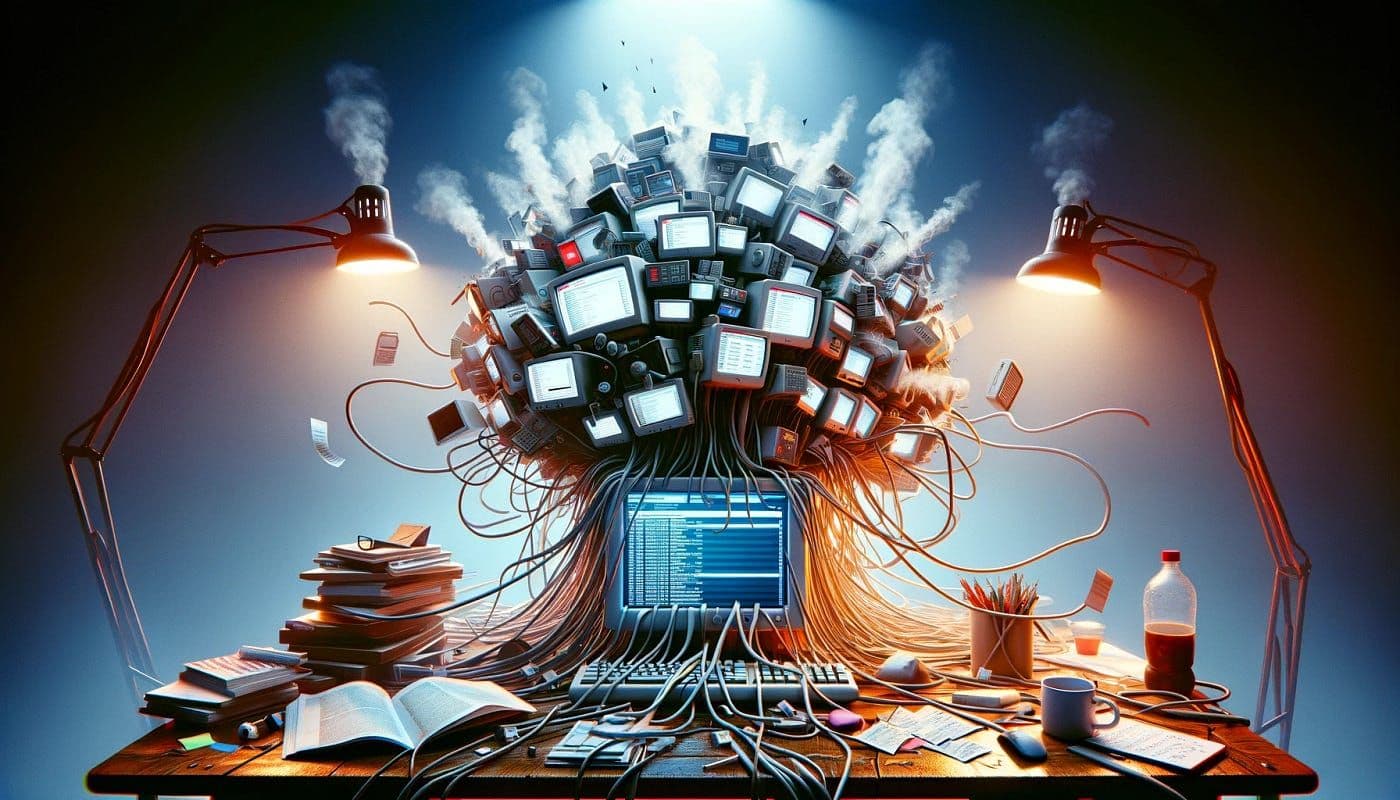

The era of deploying just one AI model is over. According to a recent survey, 37% of enterprises now run five or more large language models (LLMs) in production, up from 29% last year. As generative AI matures, selecting the right mix of models has become a strategic necessity.

Why Multiple Models Matter

Enterprises are adopting a multi-model strategy for a few key reasons:

- Use-case fit: Different models excel at different tasks. For example, Claude shines in creative writing, Gemini in data analysis, and OpenAI’s GPT‑4o in deep reasoning.

- Avoiding lock-in: Relying on one provider increases risk. A diversified model stack offers flexibility and resilience.

- Cost-performance balance: Paying premium per-token prices isn’t efficient for every task. Using smaller or open‑source models (now embraced by more large enterprises) helps manage costs.
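
To make the cost-performance point concrete, here is a minimal back-of-the-envelope sketch. The model names, per-token prices, and traffic volumes are hypothetical placeholders, not real vendor pricing; substitute your own figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPrice:
    name: str
    usd_per_million_tokens: float  # hypothetical blended input/output price


def monthly_cost(price: ModelPrice, requests: int, tokens_per_request: int) -> float:
    """Estimated monthly spend for sending `requests` calls to one model."""
    total_tokens = requests * tokens_per_request
    return total_tokens / 1_000_000 * price.usd_per_million_tokens


def blended_cost(small: ModelPrice, premium: ModelPrice, requests: int,
                 tokens_per_request: int, small_share: float) -> float:
    """Spend when `small_share` of traffic goes to the small model, the rest to premium."""
    small_requests = round(requests * small_share)
    premium_requests = requests - small_requests
    return (monthly_cost(small, small_requests, tokens_per_request)
            + monthly_cost(premium, premium_requests, tokens_per_request))


# Hypothetical prices and workload, for illustration only.
premium = ModelPrice("premium-model", 10.0)
small = ModelPrice("small-model", 0.5)
requests_per_month = 2_000_000
tokens_per_request = 1_500

premium_cost = monthly_cost(premium, requests_per_month, tokens_per_request)
small_cost = monthly_cost(small, requests_per_month, tokens_per_request)
mixed_cost = blended_cost(small, premium, requests_per_month, tokens_per_request, 0.8)

print(f"All premium: ${premium_cost:,.0f}")
print(f"All small:   ${small_cost:,.0f}")
print(f"80/20 mix:   ${mixed_cost:,.0f}")
```

Under these assumed numbers, sending 80% of traffic to the smaller model cuts spend substantially compared with using the premium model for everything. Whether that split is acceptable depends on how well the smaller model handles those tasks.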

In 2024, enterprises began shifting AI from experimental R&D into mainstream operations. Strategic deployments are now often budgeted directly within IT, signaling maturity.

Key Steps to Choose AI Models Wisely

A smart model selection strategy includes three steps:

- Define the use case: Start by understanding requirements. Is your goal drafting copy, answering questions, coding, analyzing data, or real-time interaction? Each need demands different capabilities.

- Assess trade-offs: Look at performance, cost, latency, reliability, and safety. Frameworks like Credo AI’s Model Trust Score help evaluate models based on context-specific factors. Think speed, affordability, and security.

- Implement routing and orchestration: Use techniques like ensemble learning, model cascades, or routers to dynamically assign tasks to the best-fit model. Cutting-edge systems like EMAFusion route queries to optimize accuracy and cost, achieving up to 4× savings with better performance.
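
The model cascade mentioned in the last step can be sketched in a few lines. This is a minimal illustration of the general idea, not how EMAFusion or any particular product works. The `Tier` class, the stub models, the confidence scores, and the cost units are all hypothetical. In practice each `run` function would call a real model API and derive confidence from a verifier or scoring model.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# A model function returns (answer, confidence in [0, 1]).
ModelFn = Callable[[str], Tuple[str, float]]


@dataclass
class Tier:
    name: str
    cost_per_call: float  # hypothetical relative cost units
    run: ModelFn


def cascade(query: str, tiers: List[Tier],
            min_confidence: float = 0.8) -> Tuple[str, str, float]:
    """Try tiers from cheapest to most expensive and stop at the first
    answer whose confidence meets the threshold.

    Returns (tier name used, answer, total cost spent). If no tier is
    confident enough, the most expensive tier's answer is returned.
    """
    if not tiers:
        raise ValueError("at least one tier is required")
    spent = 0.0
    used, answer = "", ""
    for tier in sorted(tiers, key=lambda t: t.cost_per_call):
        answer, confidence = tier.run(query)
        spent += tier.cost_per_call  # every attempted tier is paid for
        used = tier.name
        if confidence >= min_confidence:
            break
    return used, answer, spent


# Stub models standing in for real API calls (assumptions for the demo).
def small_model(query: str) -> Tuple[str, float]:
    # Pretend the small model is only confident on short queries.
    confidence = 0.9 if len(query.split()) <= 8 else 0.4
    return f"[small] {query}", confidence


def large_model(query: str) -> Tuple[str, float]:
    return f"[large] {query}", 0.95


tiers = [Tier("large", 10.0, large_model), Tier("small", 1.0, small_model)]

easy = cascade("What is our refund policy?", tiers)
hard = cascade(
    "Compare the indemnification clauses across these three supplier "
    "contracts and flag conflicts",
    tiers,
)
print(easy)
print(hard)
```

Note the trade-off in this design: an escalated query pays for both tiers, so a cascade only saves money when most traffic is resolved by the cheaper model. A router that predicts the right tier up front avoids the double cost but needs a reliable classifier.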

What’s Ahead in 2025

As AI budgets grow (Gartner projects AI spending to increase by nearly 6% this year) and infrastructure stabilizes, enterprises will need robust ModelOps: tools for lifecycle management, monitoring, retraining, and compliance.

Expect to see more domain-specific fine-tuned models, dynamic multi-model pipelines, and AI governance tools that balance innovation with risk control. Teams that treat model choice as a core competitive advantage rather than a one‑time implementation will lead the way.

Bottom line: AI in 2025 isn’t about picking “the best” model. It’s about building a responsive, multi-model ecosystem that matches models to missions, delivers quality at scale, and evolves with new advances.