OpenAI released its advanced voice mode to more people. Here’s how to get it.

ChatGPT’s new voice AI

OpenAI has begun expanding access to its Advanced Voice Mode in ChatGPT, giving users the ability to interact with the AI more naturally than ever. The feature lets users interrupt the AI’s responses mid-sentence, and the AI can respond to the emotion or tone in a user’s voice. Initially introduced back in May with the release of GPT-4o, the feature has been available only to a select group of invitees since July.

While there were some early safety concerns (leading to the feature being briefly pulled in May), OpenAI is now rolling out this highly anticipated voice mode to a wider audience.

What’s New in Advanced Voice Mode?

Although the standard voice mode has been available for some time to ChatGPT Plus users, it has its limitations. For instance, users can’t verbally interrupt responses and have to tap the screen to stop the AI. Advanced Voice Mode changes that by letting you speak over the AI if you need to redirect or stop a response.

Additionally, the new version senses emotional cues in your voice and adjusts its responses to better fit the tone of the conversation. It builds on ChatGPT’s ability to remember details you’ve shared and personalize interactions accordingly. It’s also more adept at pronouncing words in non-English languages, making the conversation smoother and more natural.

A demonstration of the tool shared by AI investor Allie Miller in August showcased the model’s ability to shift its tone, accent, and even content depending on the situation, which has impressed early users.

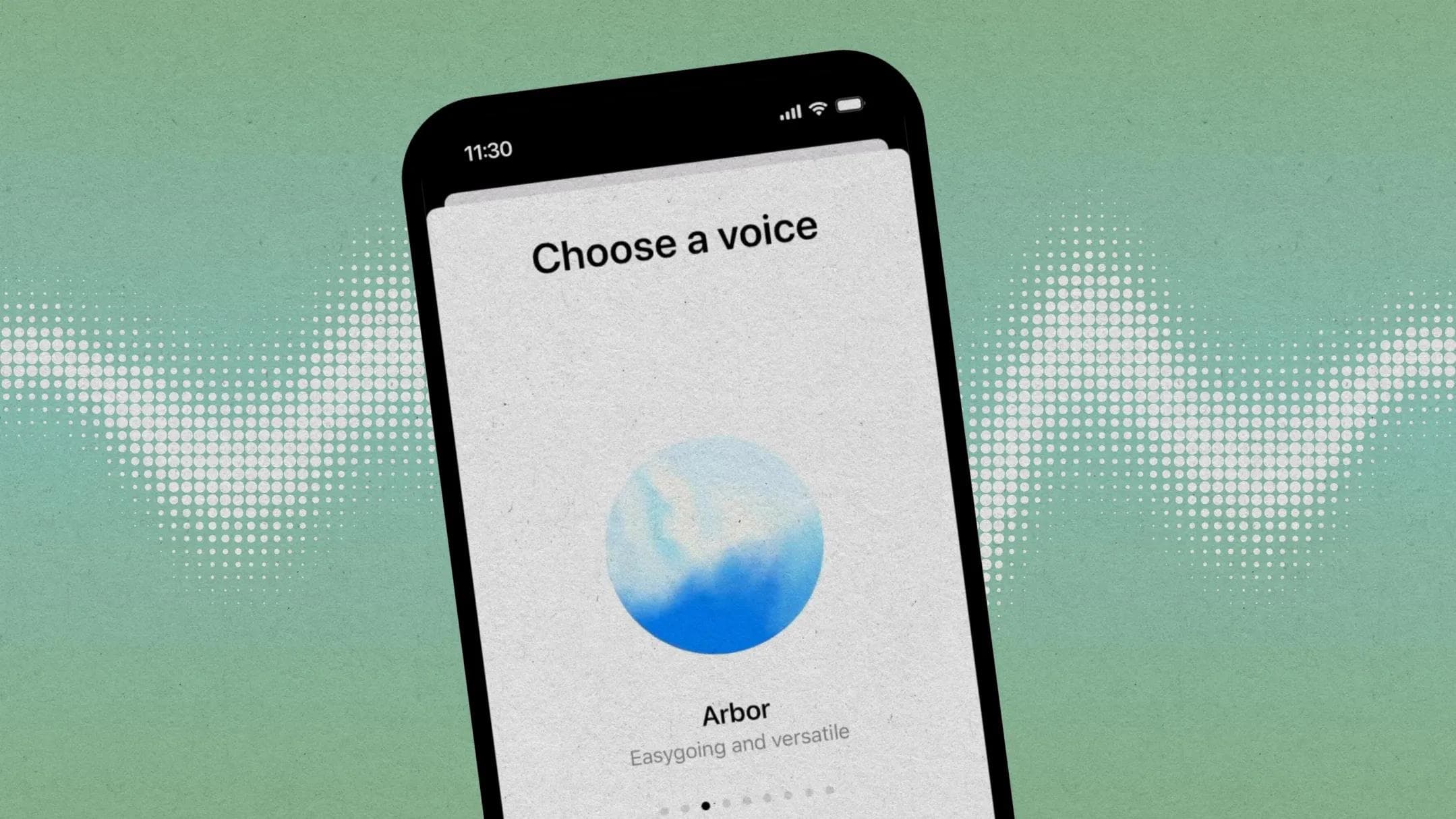

New Voices and Personalization

Following early criticism that one voice resembled a known actor’s (Scarlett Johansson, who voiced the AI in Her), OpenAI has now introduced five new voices: Arbor, Maple, Sol, Spruce, and Vale. According to OpenAI, these voices were recorded by professional actors from around the globe, selected for their warmth, approachability, and dynamic tones, which makes them enjoyable to interact with during extended conversations.

Who Has Access to Advanced Voice Mode?

Right now, OpenAI is rolling out this new voice mode to its Plus and Team subscribers, who pay $20 and $30 per month, respectively. These users are the first to try out the upgraded feature, but OpenAI plans to extend access to its Enterprise and Education customers soon. The exact timeline remains vague, but Plus users are expected to have full access by the end of fall.

However, there are some geographical restrictions. Users in the EU, UK, Switzerland, Iceland, Norway, and Liechtenstein don’t yet have access to the new voice mode. Also, OpenAI has not announced any plans to bring Advanced Voice Mode to free users, though the standard voice mode remains available for all paid users.

Ensuring Safety

As part of its launch, OpenAI has emphasized the safety of Advanced Voice Mode. The company worked with external experts from 29 different regions, who collectively speak 45 languages, to ensure the system meets high safety standards. OpenAI’s safety checks include preventing the AI from generating harmful, violent, or inappropriate content and from mimicking real people’s voices without permission.

Despite these efforts, OpenAI’s models remain closed-source, meaning independent researchers have less access to evaluate the model’s safety and biases compared to open-source alternatives. Nonetheless, the company continues to prioritize trust and safety in this rollout.

Conclusion

OpenAI’s new Advanced Voice Mode offers a more dynamic, natural, and personalized conversation experience. As it becomes more widely available, users can look forward to a significant improvement in how they interact with AI, making conversations feel more like speaking with a real person.

For now, it’s accessible only to paid subscribers, and OpenAI plans to expand access in the coming months.